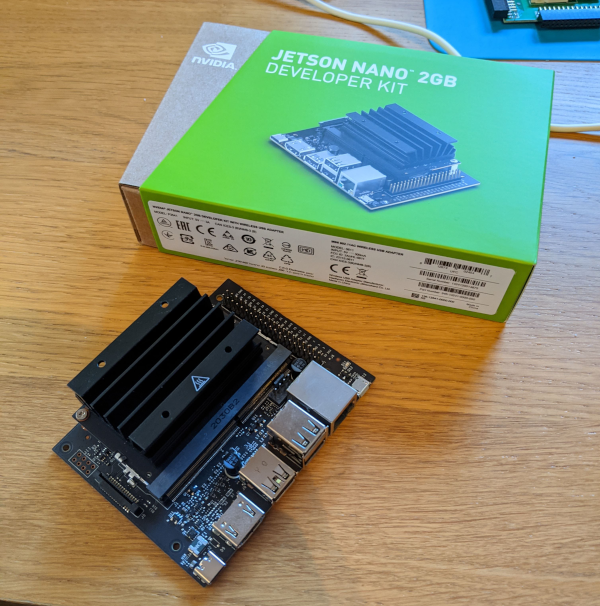

Now that I have the Jetson Nano all setup in a case, I wanted to test out a how well the GPU works on some simple problems. This post looks at my first test which looks at a simple method of calculating Pi, and looks at how quickly is can carry out those calculations.

This method of calculating Pi is oftern refered to as the dart board method. This method relies on placing random number onto a square and then determining if that random number falls within a circle with a diameter that is the same size as the box or in the area outside of the circle.

Image from wikipedia

By selecting two random numbers with are evenly distributed between a min and max, to be the x and Y coordinates, we can then calculate the distance from the centre of the circle using pythagorus theorem.

Where our distance from the centre from the cicrle is c, and our randomly selected x and y co-ordinates a and b. This means that our distance from the centre of the circle is given by:

The next step is to see if this point falls within the circle, or in the area outside the circle. this is completed by comparing this distance from the centre of the circle with the radius of the circle. If this distance is less than the circle radius, then it is within the circle, and we count up how many times we get a value that falls inside the circle.

We can the use the ratio of the number of points that we have found within the circle and the total number of test completed to approximate the value of pi. The area of the circle is defined as:

The area of the square is twice the radius of the circle, for each the x and y axis we have used for generating all our points is, therefor the area is

We can therefore calculate that the ratio in the area between the circle and the square is:

This can be simplified to:

Assuming that we have a sufficiently large number of points we can assume that the number of points which fall inside the circle is proportional to the total area of the square, so after rearranging we can then replace the areas with the number of points that fall within each:

This formula, with is relatively simple can allow us to compute the value of pi, which can be realised with python using numpy. The implementation, generates a random number between a width, and then calculates the distance from the centre, that this point is from the centre of the circle. This is then compared to the circles radius, and if it falls within the circle the total is implemented. The final value of Pi is then calculated when the main loop is finished.

import numpy as np

import time

iterations = int(1e6)

totalIn = 0.0

width = np.power(2,15)

start = time.time()

for iter in range(iterations):

x = np.random.random_integers(-width,width)

y = np.random.random_integers(-width,width)

dist = np.sqrt(np.power(x,2)+np.power(y,2))

if dist<width:

totalIn += 1

my_pi = 4 * np.divide(totalIn,iterations)

runtime = time.time() - start

print("Pi is calculated as : %.10f" % my_pi)

print(runtime)

The accuracy of the value of Pi is highly linked to the number of iterations that are completed, as well as the number of bits used to calculate the final value. By varying the number of points we calculate with we can see the error reducing, the table bellow is for the code above, run on a Jetson Nano 2Gb.

| Iterations | Pi Calculated | Run Time(s) |

|---|---|---|

| 100 | 3.0000000000 | 0.077 |

| 1,000 | 3.0760000000 | 0.692 |

| 10,000 | 3.1360000000 | 7.14 |

| 100,000 | 3.1518800000 | 69.6 |

| 1,000,000 | 3.1409400000 | 686 |

The main thing we have control of is the number of iterations, and we can attempt to complete more iterations by optimising our code, the problem is the more iterations we do the longer is takes to complete, with 1,000,000 iterations taking 10 minutes we need to improve the execution time if we want to carry out more iterations.

In Python Loops are relatively slow compared to other languages such as ‘c’ and ‘c++’, this is due to the dynamically typed nature of python. Vectorising the calculation of the values, using Numpy helps to remove or limit the number of loops that need to be completed, and operations on vectors are generally more efficient in dynamically typed languages, and by avoiding context switching large efficiency gains can be found.

import numpy as np

import time

test_points = int(1e9)

sample_size = int(1e6)

iterations = int(test_points/sample_size)*sample_size

count = np.array(0,dtype=np.float64)

width = np.power(2,15)

start = time.time()

max_value = np.array(width*width,dtype=np.float32)

for iter in range(int(iterations/sample_size)):

x = np.random.random_integers(-width,width,sample_size)

y = np.random.random_integers(-width,width,sample_size)

result = np.less(np.add(x*x,y*y),max_value)

count = count + np.sum(result)

pi_2 = 4.0 * np.divide(count,iterations)

runtime = time.time() - start

print("Pi is calculated as : %.10f" % pi_2)

print("Runs: %i" % iterations)

print(runtime)

This approach gives a significant improvement, we are now able to do the number of calculations that took over 10 minutes, in less than a second.

| Iterations | Pi value | Run Time (s) |

|---|---|---|

| 1,000,000 | 3.1449280000 | 0.28814268112182617 |

| 10,000,000 | 3.1422772000 | 2.569852352142334 |

| 100,000,000 | 3.1415957200 | 26.24626588821411 |

Now making use of the unique of the Nvidia Jetson boards which is the GPU along with the 128 CUDA cores. Taking advantage of these features involves switching from Numpy which executes on arm core to using CUPY. The CUPY library is designed to be compatible with numpy, using the same syntax and function names but to be used to execute the commands on the GPU.

While the syntax is almost identical between Numpy and CUPY, for the functions themselves, we actually need to deal with where the variables are located for the GPU version. Typically on a traditional Desktop PC the memory for the CPU and GPU are separate, and each time a variable needs to go between the two it has to be transferred over what is typically a PCIe link. This transfer takes time, but in the Jetson Boards the memory is shared between the CPU and the GPU, which means transfers are no longer a real issue but we still have the map the variables as ones that are able to be processed by the GPU as shown by the ‘cp.array()’ commands, and then make that data available to Numpy and the CPU with commands such as ‘cp.asnumpy()’.

import numpy as np

import cupy as cp

import time

test_points = int(1e9)

sample_size = int(1e6)

iterations = int(test_points/sample_size)*sample_size

count = cp.array(0,dtype=cp.float64)

width = cp.power(2,15)

start = time.time()

max_value = cp.array(width*width,dtype=cp.float32)

for iter in range(int(iterations/sample_size)):

x = cp.random.random_integers(-width,width,sample_size)

y = cp.random.random_integers(-width,width,sample_size)

result = cp.less(cp.add(x*x,y*y),max_value)

count = count + cp.sum(result)

pi_2 = cp.asnumpy(4.0 * cp.divide(count,iterations))

runtime = time.time() - start

print("Pi is calculated as : %.10f" % pi_2)

print("Runs: %i" % iterations)

print(runtime)

Apart from these memory management changes that code is broadly the same, so with these minor adjustment to make use of the GPU we can now get a roughly 5 times improvement in speed compared to the vectorised approach. The originally looped code took over 10 minutes to calculate one million iterations, which are now completed in just over half a second.

| Iterations | Pi value | Run Time (s) |

|---|---|---|

| 1,000,000 | 3.1428120000 | 0.536 |

| 10,000,000 | 3.1412428000 | 0.952 |

| 100,000,000 | 3.1414888800 | 5.70 |

| 1,000,000,000 | 3.1414574560 | 48.8 |

These large improvements in speed, have allowed us to complete far more iterations in a shorter amount of time, and in terms of out algorithm we can see the improvements in the accuracy of Pi we are estimating. By the time we are calculating 1e9 values, we are getting pi correct to 4 significant figures. The question is are there any more options to improve the speed of our algorithm?

One element that we skipped over is the size of the vectors that we are working with, for the above testing they were fixed at 1e6, without any real justification. This number was picked as an integer divide of the number of points was planning to test with, but could this be optimised, lets test a few Vector lengths with a fixed number of iterations at 1e8.

| Vector Length | CPU Run Time (s) | GPU Run Time |

|---|---|---|

| 1e3 | 32.9 | 846 |

| 2e3 | 21.3 | 420 |

| 5e3 | 14.4 | 176 |

| 1e4 | 12.0 | 82.8 |

| 2e4 | 11.7 | 44.2 |

| 5e4 | 12.2 | 19.2 |

| 1e5 | 12.0 | 10.9 |

| 2e5 | 11.5 | 6.90 |

| 5e5 | 10.7 | 5.60 |

| 1e6 | 10.4 | 5.18 |

| 2e6 | 10.2 | 5.01 |

| 5e6 | 10.8 | 4.94 |

| 1e7 | 10.6 | 5.04 |

| 2e7 | 10.6 | 5.45 |

| 2.5e7 | 10.6 | 6.62 |

What we see is that the Run time for the CPU and GPU both hit plateau, with the GPU implementation twice as as the CPU for large vectors. In contrast we also find that for small vector sizes the CPU implementation is significantly fast than the GPU. The vector length of 2.5e7, was the largest size that could be fit in the memory of the Jetson (vectors bigger than this caused failed out of memory errors).

This is shown that the Jetson Nano can extract some more performance, by making use of the CUPY library to leverage the GPU has given x2 speed up over the CPU vectorised code. The x10000 time speed up from the original looping code, shows that the structure of the algorithm needs to be optimised, before assessing the before on the hardware in question.